+

+---

+

+**Documentation**: https://fastapi.tiangolo.com

+

+**Source Code**: https://github.com/tiangolo/fastapi

+

+---

+

+FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.6+ based on standard Python type hints.

+

+The key features are:

+

+* **Fast**: Very high performance, on par with **NodeJS** and **Go** (thanks to Starlette and Pydantic). [One of the fastest Python frameworks available](#performance).

+

+* **Fast to code**: Increase the speed to develop features by about 200% to 300%. *

+* **Fewer bugs**: Reduce about 40% of human (developer) induced errors. *

+* **Intuitive**: Great editor support. Completion everywhere. Less time debugging.

+* **Easy**: Designed to be easy to use and learn. Less time reading docs.

+* **Short**: Minimize code duplication. Multiple features from each parameter declaration. Fewer bugs.

+* **Robust**: Get production-ready code. With automatic interactive documentation.

+* **Standards-based**: Based on (and fully compatible with) the open standards for APIs: OpenAPI (previously known as Swagger) and JSON Schema.

+

+* Estimate based on tests with an internal development team building production applications.

+


+## Opinions

+

+"_[...] I'm using **FastAPI** a ton these days. [...] I'm actually planning to use it for all of my team's **ML services at Microsoft**. Some of them are getting integrated into the core **Windows** product and some **Office** products._"

+

+

+

+---

+

+"_We adopted the **FastAPI** library to spawn a **REST** server that can be queried to obtain **predictions**. [for Ludwig]_"

+

+

+Piero Molino, Yaroslav Dudin, and Sai Sumanth Miryala - Uber (ref)

+

+---

+

+"_**Netflix** is pleased to announce the open-source release of our **crisis management** orchestration framework: **Dispatch**! [built with **FastAPI**]_"

+

+

+Kevin Glisson, Marc Vilanova, Forest Monsen - Netflix (ref)

+

+---

+

+"_I’m over the moon excited about **FastAPI**. It’s so fun!_"

+

+

+

+---

+

+"_Honestly, what you've built looks super solid and polished. In many ways, it's what I wanted **Hug** to be - it's really inspiring to see someone build that._"

+

+

+

+---

+

+"_If you're looking to learn one **modern framework** for building REST APIs, check out **FastAPI** [...] It's fast, easy to use and easy to learn [...]_"

+

+"_We've switched over to **FastAPI** for our **APIs** [...] I think you'll like it [...]_"

+

+

+

+---

+

+## **Typer**, the FastAPI of CLIs

+

+

+

+If you are building a CLI app to be used in the terminal instead of a web API, check out **Typer**.

+

+**Typer** is FastAPI's little sibling. And it's intended to be the **FastAPI of CLIs**. ⌨️ 🚀

+

+## Requirements

+

+Python 3.6+

+

+FastAPI stands on the shoulders of giants:

+

+* Starlette for the web parts.

+* Pydantic for the data parts.

+

+## Installation

+

+

+

+## Example

+

+### Create it

+

+* Create a file `main.py` with:

+

+```Python

+from typing import Optional

+

+from fastapi import FastAPI

+

+app = FastAPI()

+

+

+@app.get("/")

+def read_root():

+    return {"Hello": "World"}

+

+

+@app.get("/items/{item_id}")

+def read_item(item_id: int, q: Optional[str] = None):

+    return {"item_id": item_id, "q": q}

+```

+

+

+Or use async def...

+

+If your code uses `async` / `await`, use `async def`:

+

+```Python hl_lines="9 14"

+from typing import Optional

+

+from fastapi import FastAPI

+

+app = FastAPI()

+

+

+@app.get("/")

+async def read_root():

+    return {"Hello": "World"}

+

+

+@app.get("/items/{item_id}")

+async def read_item(item_id: int, q: Optional[str] = None):

+    return {"item_id": item_id, "q": q}

+```

+

+**Note**:

+

+If you are not sure which one to use, check the _"In a hurry?"_ section about `async` and `await` in the docs.

+

+

+

+### Run it

+

+Run the server with:

+

+

+

+```console

+$ uvicorn main:app --reload

+

+INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

+INFO: Started reloader process [28720]

+INFO: Started server process [28722]

+INFO: Waiting for application startup.

+INFO: Application startup complete.

+```

+

+

+

+

+About the command uvicorn main:app --reload...

+

+The command `uvicorn main:app` refers to:

+

+* `main`: the file `main.py` (the Python "module").

+* `app`: the object created inside of `main.py` with the line `app = FastAPI()`.

+* `--reload`: make the server restart after code changes. Only do this for development.
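The `main:app` notation can be read as "import the module, then fetch the attribute". The sketch below illustrates that idea with the standard library only. `load_target` is a hypothetical helper named for this example and is not Uvicorn's actual loader, and `json:dumps` stands in for `main:app` so the snippet runs without a `main.py` file.

```python
import importlib


def load_target(import_string: str):
    """Resolve a "module:attribute" string to the object it names."""
    module_name, _, attribute = import_string.partition(":")
    if not module_name or not attribute:
        raise ValueError(f"expected 'module:attribute', got {import_string!r}")
    # Import the module by name, then look up the attribute on it.
    module = importlib.import_module(module_name)
    return getattr(module, attribute)


# Stand-in target from the standard library (assumption: used only so the
# sketch is runnable on its own; with main.py present it would be "main:app").
dumps = load_target("json:dumps")
```

With a `main.py` on the import path, `load_target("main:app")` would return the `app` object created by `app = FastAPI()`.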

+

+

+

+### Check it

+

+Open your browser at http://127.0.0.1:8000/items/5?q=somequery.

+

+You will see the JSON response as:

+

+```JSON

+{"item_id": 5, "q": "somequery"}

+```

+

+You already created an API that:

+

+* Receives HTTP requests in the _paths_ `/` and `/items/{item_id}`.

+* Both _paths_ take `GET` operations (also known as HTTP _methods_).

+* The _path_ `/items/{item_id}` has a _path parameter_ `item_id` that should be an `int`.

+* The _path_ `/items/{item_id}` has an optional `str` _query parameter_ `q`.
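As a rough illustration of what these points mean for the example URL, the following standard-library sketch splits the URL into a path parameter and a query parameter by hand. `parse_item_request` is a hypothetical helper written for this example. FastAPI derives the equivalent behavior from the function signature, so you would not normally write this yourself.

```python
from urllib.parse import parse_qs, urlsplit


def parse_item_request(url: str) -> dict:
    """Extract item_id (as int) and the optional q from an /items/{item_id} URL."""
    parts = urlsplit(url)
    segments = parts.path.strip("/").split("/")
    if len(segments) != 2 or segments[0] != "items":
        raise ValueError(f"path does not match /items/{{item_id}}: {parts.path!r}")
    # int() raises ValueError when the path segment is not an integer.
    item_id = int(segments[1])
    query = parse_qs(parts.query)
    # q is optional: fall back to None when it is absent from the query string.
    q = query["q"][0] if "q" in query else None
    return {"item_id": item_id, "q": q}


parsed = parse_item_request("http://127.0.0.1:8000/items/5?q=somequery")
```

Here `parsed` holds the same data as the JSON response shown above, and a URL without `?q=...` yields `"q": None`.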

+

+### Interactive API docs

+

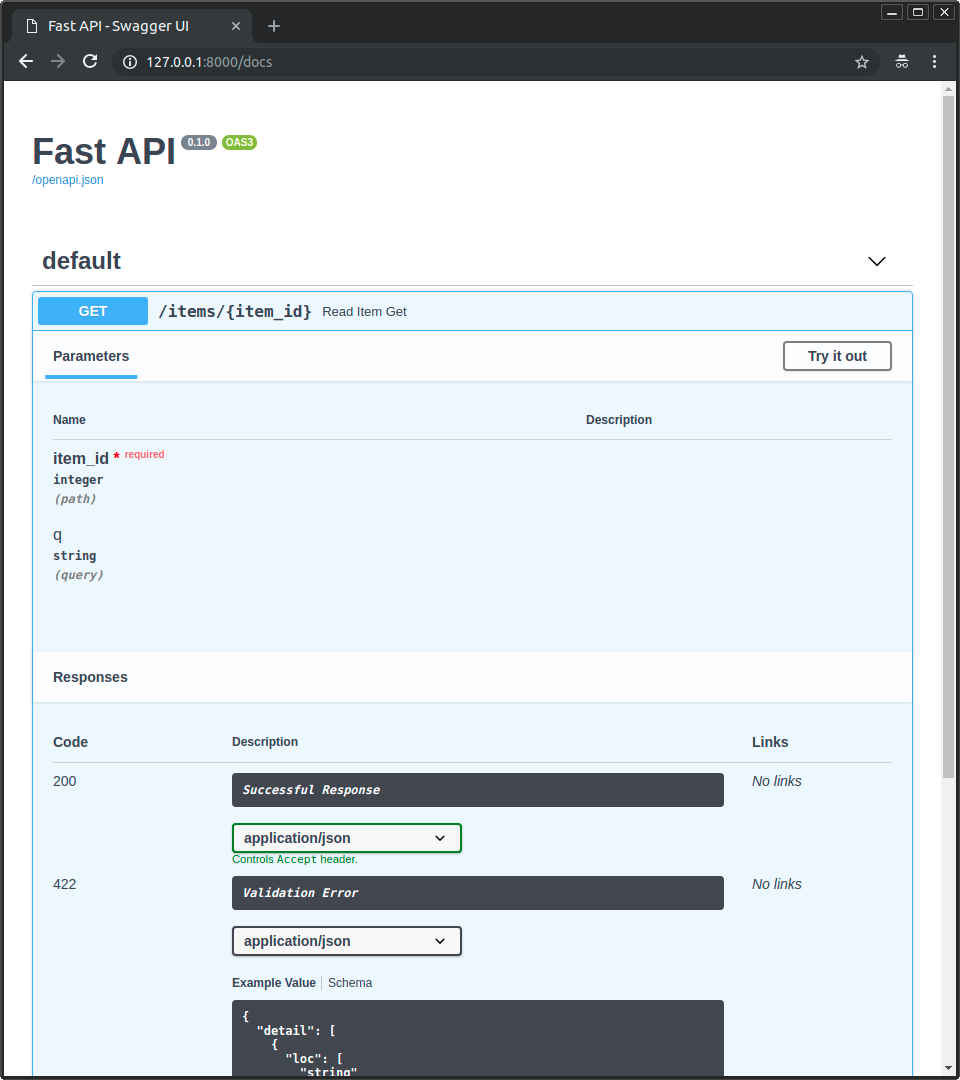

+Now go to http://127.0.0.1:8000/docs.

+

+You will see the automatic interactive API documentation (provided by Swagger UI):

+

+

+

+### Alternative API docs

+

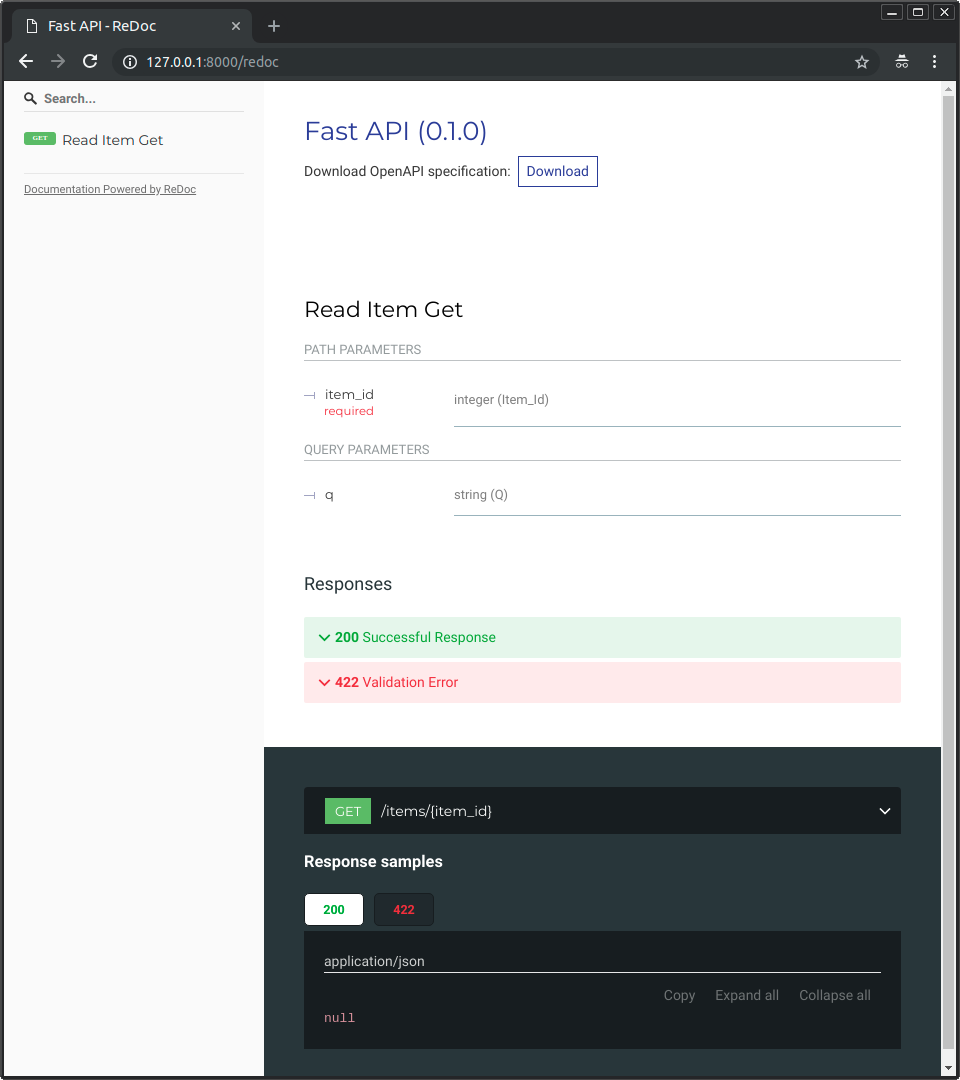

+And now, go to http://127.0.0.1:8000/redoc.

+

+You will see the alternative automatic documentation (provided by ReDoc):

+

+

+

+## Example upgrade

+

+Now modify the file `main.py` to receive a body from a `PUT` request.

+

+Declare the body using standard Python types, thanks to Pydantic.

+

+```Python hl_lines="4 9-12 25-27"

+from typing import Optional

+

+from fastapi import FastAPI

+from pydantic import BaseModel

+

+app = FastAPI()

+

+

+class Item(BaseModel):

+    name: str

+    price: float

+    is_offer: Optional[bool] = None

+

+

+@app.get("/")

+def read_root():

+    return {"Hello": "World"}

+

+

+@app.get("/items/{item_id}")

+def read_item(item_id: int, q: Optional[str] = None):

+    return {"item_id": item_id, "q": q}

+

+

+@app.put("/items/{item_id}")

+def update_item(item_id: int, item: Item):

+    return {"item_name": item.name, "item_id": item_id}

+```

+

+The server should reload automatically (because you added `--reload` to the `uvicorn` command above).

+

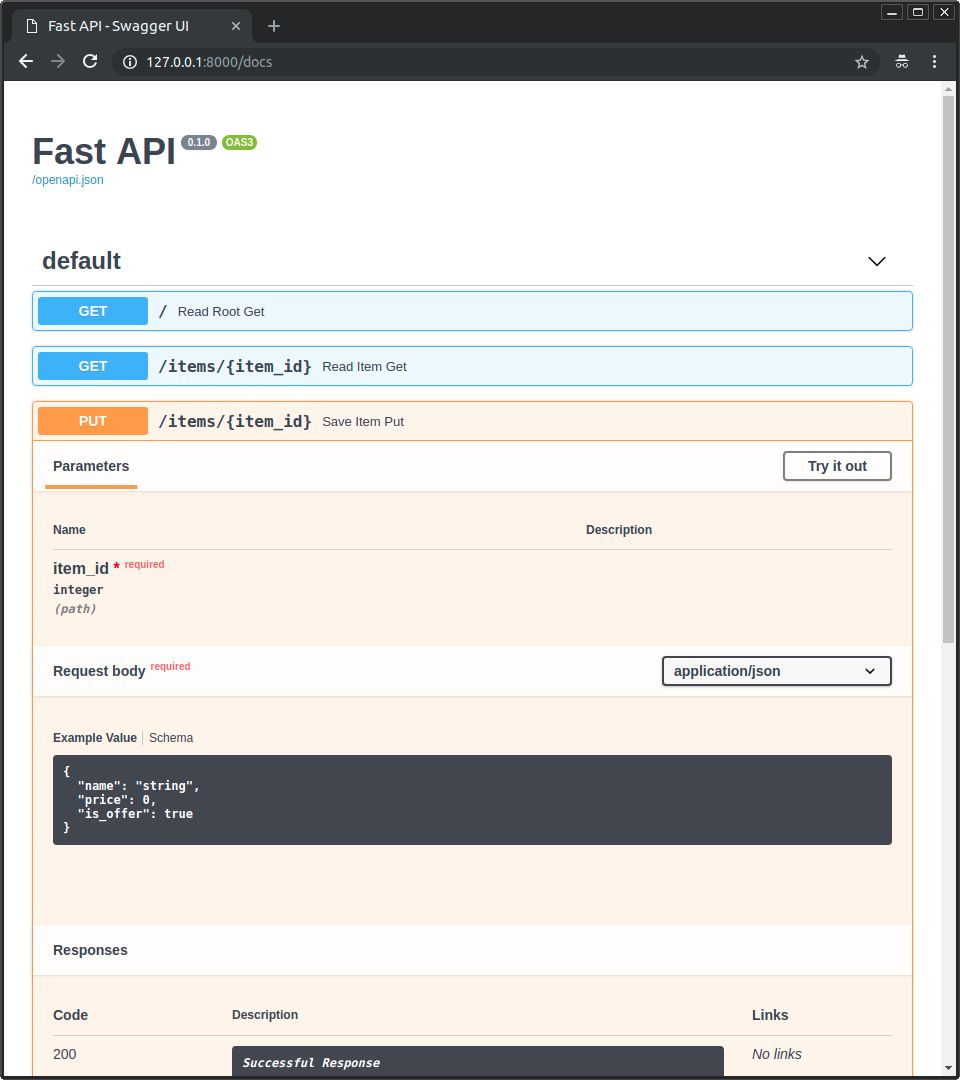

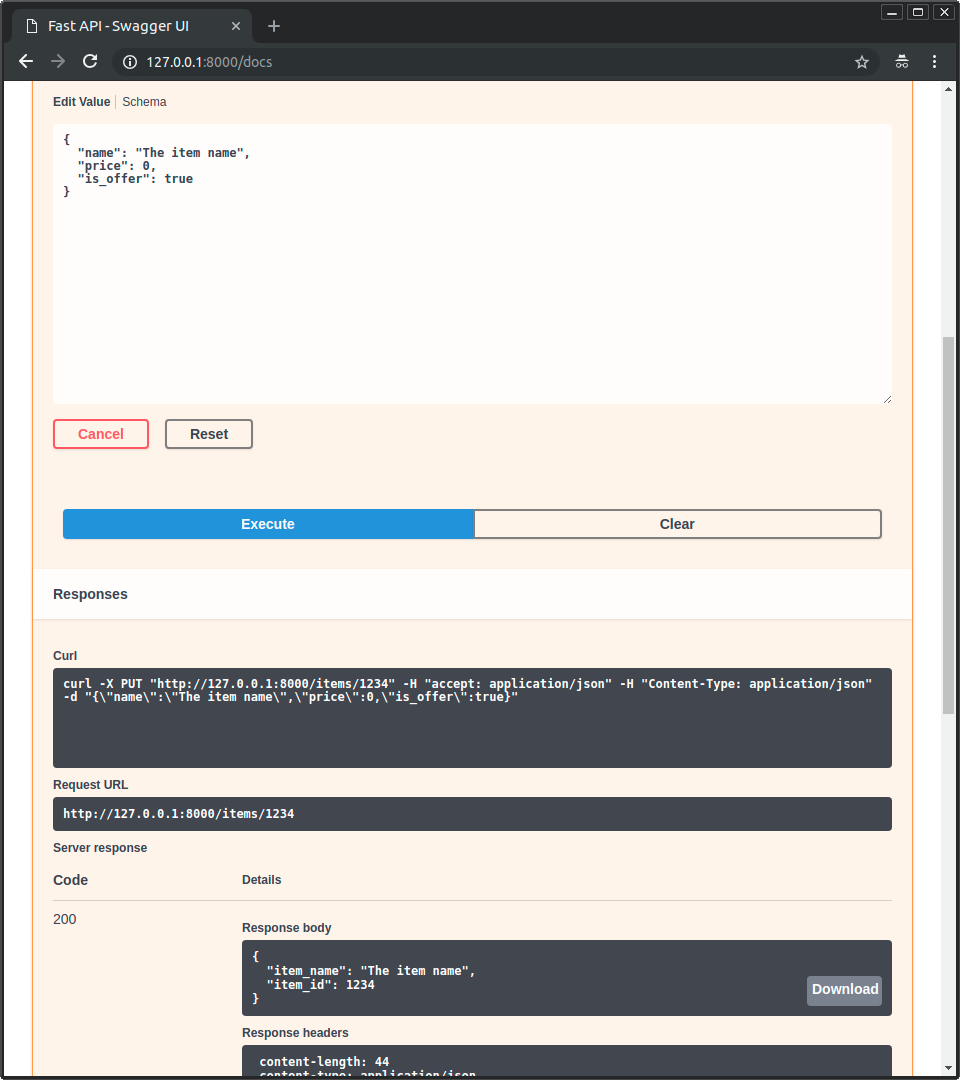

+### Interactive API docs upgrade

+

+Now go to http://127.0.0.1:8000/docs.

+

+* The interactive API documentation will be automatically updated, including the new body:

+

+

+

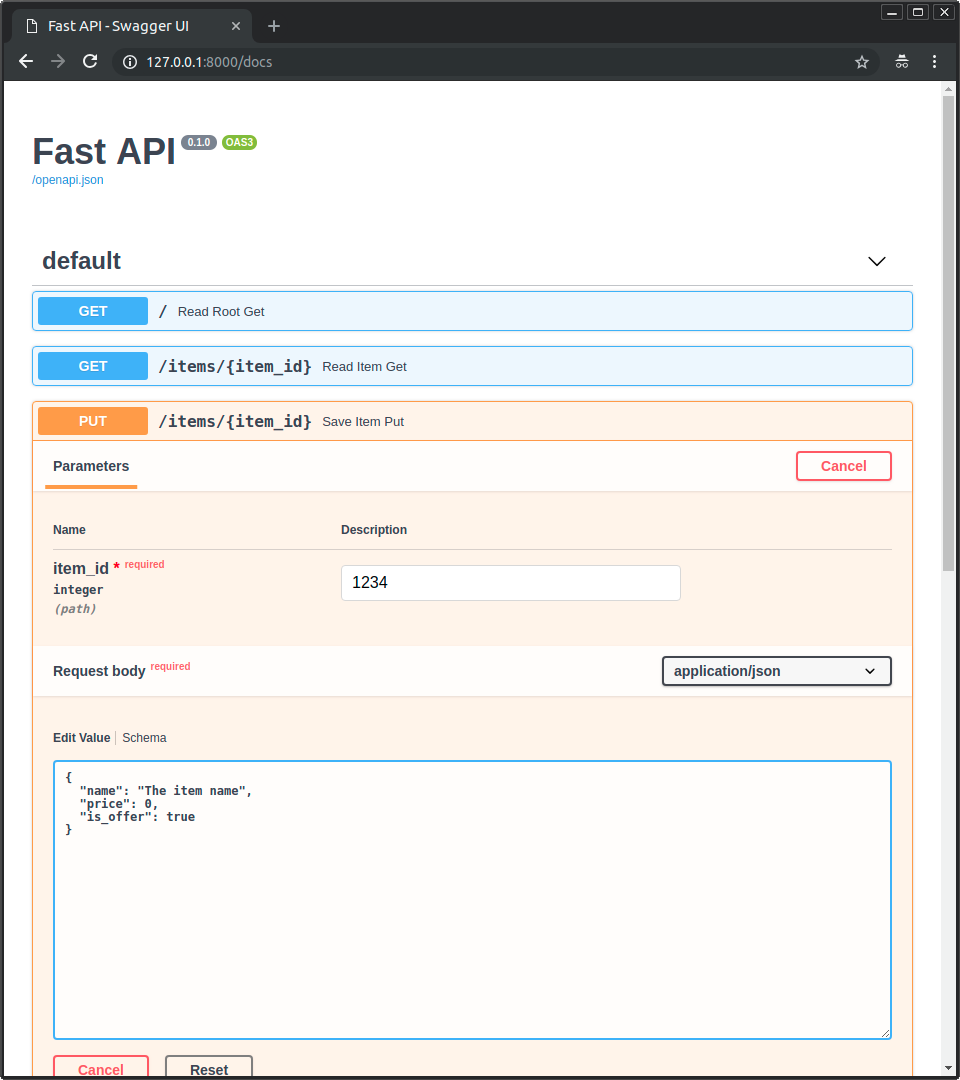

+* Click the "Try it out" button. It lets you fill in the parameters and interact with the API directly:

+

+

+

+* Then click the "Execute" button. The user interface will communicate with your API, send the parameters, get the results, and show them on the screen:

+

+

+

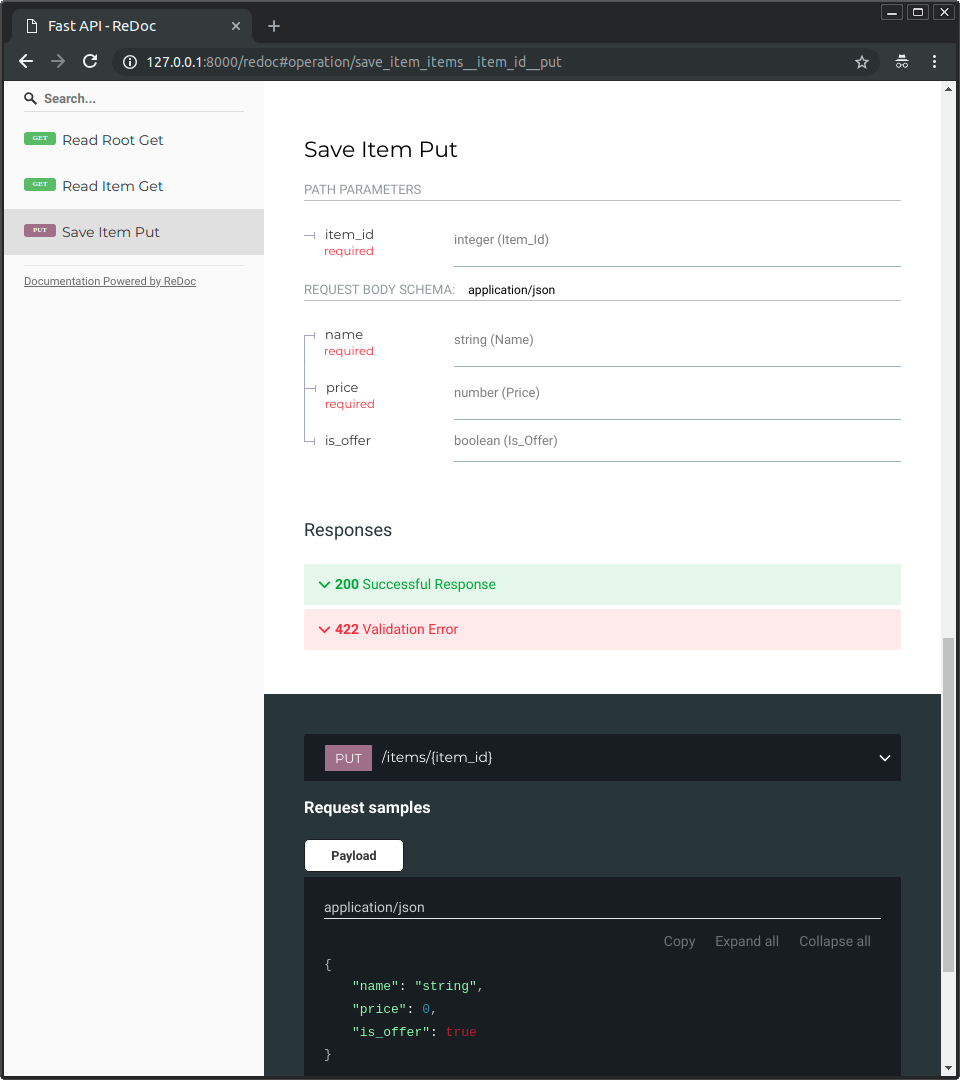

+### Alternative API docs upgrade

+

+And now, go to http://127.0.0.1:8000/redoc.

+

+* The alternative documentation will also reflect the new query parameter and body:

+

+

+

+### Recap

+

+In summary, you declare **once** the types of parameters, body, etc. as function parameters.

+

+You do that with standard modern Python types.

+

+You don't have to learn a new syntax, the methods or classes of a specific library, etc.

+

+Just standard **Python 3.6+**.

+

+For example, for an `int`:

+

+```Python

+item_id: int

+```

+

+or for a more complex `Item` model:

+

+```Python

+item: Item

+```

+

+...and with that single declaration you get:

+

+* Editor support, including:

+    * Completion.

+    * Type checks.

+* Validation of data:

+    * Automatic and clear errors when the data is invalid.

+    * Validation even for deeply nested JSON objects.

+* Conversion of input data: coming from the network to Python data and types. Reading from:

+    * JSON.

+    * Path parameters.

+    * Query parameters.

+    * Cookies.

+    * Headers.

+    * Forms.

+    * Files.

+* Conversion of output data: converting from Python data and types to network data (as JSON):

+    * Convert Python types (`str`, `int`, `float`, `bool`, `list`, etc).

+    * `datetime` objects.

+    * `UUID` objects.

+    * Database models.

+    * ...and many more.

+* Automatic interactive API documentation, including 2 alternative user interfaces:

+    * Swagger UI.

+    * ReDoc.
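To give a feel for how a single type declaration can drive conversion, here is a minimal sketch that reads the annotations of a function and converts raw string inputs accordingly. It is an illustration under simplifying assumptions, not how FastAPI or Pydantic are implemented: `call_with_converted_args` and `describe_item` are hypothetical names, and only plain callable annotations such as `int`, `float`, and `str` are handled.

```python
import inspect
from typing import Any, Callable, Dict


def call_with_converted_args(func: Callable[..., Any], raw: Dict[str, str]) -> Any:
    """Convert raw string inputs to the types annotated on func, then call it."""
    kwargs = {}
    for name, param in inspect.signature(func).parameters.items():
        if name in raw:
            annotation = param.annotation
            # Unannotated parameters are passed through as strings.
            convert = str if annotation is inspect.Parameter.empty else annotation
            kwargs[name] = convert(raw[name])
        elif param.default is inspect.Parameter.empty:
            # No value supplied and no default declared: the parameter is required.
            raise ValueError(f"missing required parameter: {name}")
    return func(**kwargs)


# Hypothetical handler; the annotations are the only "schema" it declares.
def describe_item(item_id: int, price: float, q: str = ""):
    return {"item_id": item_id, "price": price, "q": q}


result = call_with_converted_args(describe_item, {"item_id": "5", "price": "9.5"})
```

In this sketch `result` contains an `int` and a `float` even though the inputs were strings, and passing `{"item_id": "foo"}` raises a `ValueError`, which loosely mirrors the validation and conversion bullets above.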

+

+---

+

+Coming back to the previous code example, **FastAPI** will:

+

+* Validate that there is an `item_id` in the path for `GET` and `PUT` requests.

+* Validate that the `item_id` is of type `int` for `GET` and `PUT` requests.

+    * If it is not, the client will see a useful, clear error.

+* Check if there is an optional query parameter named `q` (as in `http://127.0.0.1:8000/items/5?q=somequery`) for `GET` requests.

+    * As the `q` parameter is declared with `= None`, it is optional.

+    * Without the `None` it would be required (as is the body in the case with `PUT`).

+* For `PUT` requests to `/items/{item_id}`, read the body as JSON:

+    * Check that it has a required attribute `name` that should be a `str`.

+    * Check that it has a required attribute `price` that has to be a `float`.

+    * Check that it has an optional attribute `is_offer`, that should be a `bool`, if present.

+    * All this would also work for deeply nested JSON objects.

+* Convert from and to JSON automatically.

+* Document everything with OpenAPI, that can be used by:

+    * Interactive documentation systems.

+    * Automatic client code generation systems, for many languages.

+* Provide 2 interactive documentation web interfaces directly.
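The body checks listed above can be approximated by hand as follows. This is a deliberately strict, simplified sketch: `validate_item` is a hypothetical helper, and Pydantic's real validation is richer (it can, for example, coerce compatible values and report structured errors).

```python
import json
from typing import Any, Dict


def validate_item(body: Dict[str, Any]) -> Dict[str, Any]:
    """Check a decoded JSON body against the name / price / is_offer rules."""
    errors = []
    if not isinstance(body.get("name"), str):
        errors.append("name: required, must be a string")
    price = body.get("price")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(price, bool) or not isinstance(price, (int, float)):
        errors.append("price: required, must be a number")
    is_offer = body.get("is_offer")
    if is_offer is not None and not isinstance(is_offer, bool):
        errors.append("is_offer: must be a boolean when present")
    if errors:
        raise ValueError("; ".join(errors))
    return {"name": body["name"], "price": float(price), "is_offer": is_offer}


# Example request body, decoded from JSON as a PUT handler would receive it.
item = validate_item(json.loads('{"name": "Foo", "price": 35}'))
```

With FastAPI, declaring `item: Item` in the function signature replaces all of this hand-written checking.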

+

+---

+

+We just scratched the surface, but you already get the idea of how it all works.

+

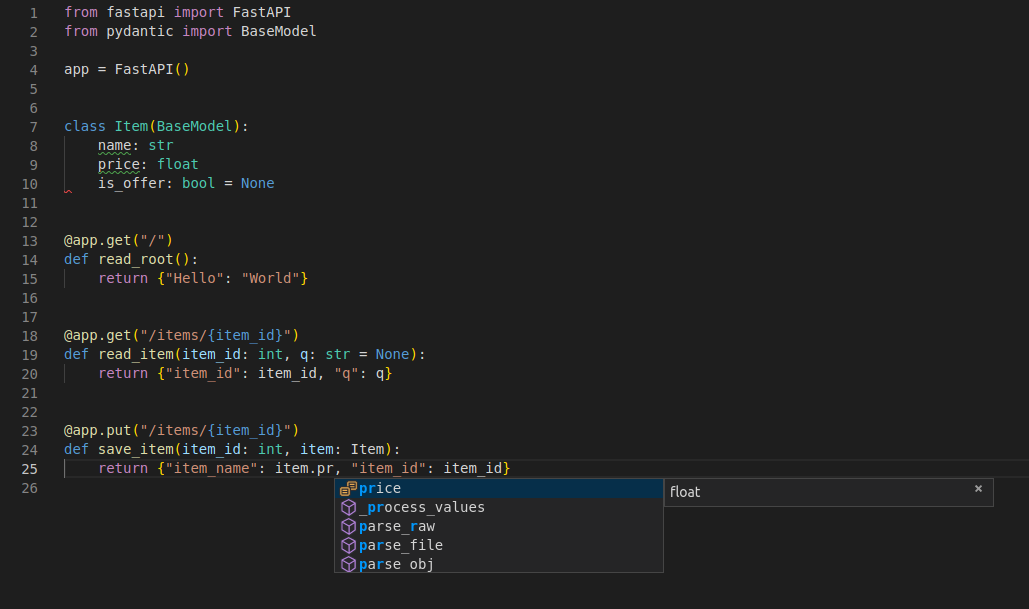

+Try changing the line with:

+

+```Python

+    return {"item_name": item.name, "item_id": item_id}

+```

+

+...from:

+

+```Python

+ ... "item_name": item.name ...

+```

+

+...to:

+

+```Python

+ ... "item_price": item.price ...

+```

+

+...and see how your editor will auto-complete the attributes and know their types:

+

+

+

+For a more complete example including more features, see the Tutorial - User Guide.

+

+**Spoiler alert**: the tutorial - user guide includes:

+

+* Declaration of **parameters** from other places, such as **headers**, **cookies**, **form fields** and **files**.

+* How to set **validation constraints** such as `max_length` or `regex`.

+* A very powerful and easy to use **Dependency Injection** system.

+* Security and authentication, including support for **OAuth2** with **JWT tokens** and **HTTP Basic** auth.

+* More advanced (but equally easy) techniques for declaring **deeply nested JSON models** (thanks to Pydantic).

+* Many extra features (thanks to Starlette), such as:

+    * **WebSockets**

+    * **GraphQL**

+    * extremely easy tests based on `requests` and `pytest`

+    * **CORS**

+    * **Cookie Sessions**

+    * ...and more.

+

+## Performance

+

+Independent TechEmpower benchmarks show **FastAPI** applications running under Uvicorn as one of the fastest Python frameworks available, only below Starlette and Uvicorn themselves (used internally by FastAPI). (*)

+

+To understand more about it, see the section Benchmarks.

+

+## Optional Dependencies

+

+Used by Pydantic:

+

+* ujson - for faster JSON "parsing".

+* email_validator - for email validation.

+

+Used by Starlette:

+

+* requests - Required if you want to use the `TestClient`.

+* aiofiles - Required if you want to use `FileResponse` or `StaticFiles`.

+* jinja2 - Required if you want to use the default template configuration.

+* python-multipart - Required if you want to support form "parsing", with `request.form()`.

+* itsdangerous - Required for `SessionMiddleware` support.

+* pyyaml - Required for Starlette's `SchemaGenerator` support (you probably don't need it with FastAPI).

+* graphene - Required for `GraphQLApp` support.

+* ujson - Required if you want to use `UJSONResponse`.

+

+Used by FastAPI / Starlette:

+

+* uvicorn - for the server that loads and serves your application.

+* orjson - Required if you want to use `ORJSONResponse`.

+

+You can install all of these with `pip install fastapi[all]`.

+

+## License

+

+This project is licensed under the terms of the MIT license.

+

diff --git a/.venv/lib/python3.9/site-packages/fastapi-0.63.0.dist-info/RECORD b/.venv/lib/python3.9/site-packages/fastapi-0.63.0.dist-info/RECORD

new file mode 100644

index 0000000..afc6d20

--- /dev/null

+++ b/.venv/lib/python3.9/site-packages/fastapi-0.63.0.dist-info/RECORD

@@ -0,0 +1,89 @@

+fastapi-0.63.0.dist-info/INSTALLER,sha256=zuuue4knoyJ-UwPPXg8fezS7VCrXJQrAP7zeNuwvFQg,4

+fastapi-0.63.0.dist-info/LICENSE,sha256=Tsif_IFIW5f-xYSy1KlhAy7v_oNEU4lP2cEnSQbMdE4,1086

+fastapi-0.63.0.dist-info/METADATA,sha256=RcIwSNhMxnNo_JBlnZes3m5PCAvJkAQ_IMlcYl0O4rU,22908

+fastapi-0.63.0.dist-info/RECORD,,

+fastapi-0.63.0.dist-info/REQUESTED,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

+fastapi-0.63.0.dist-info/WHEEL,sha256=CqyTrkghQBNsEzLD3HbCSEIJ_fY58-XpoU29dUzwHSk,81

+fastapi/__init__.py,sha256=IYUOJjO5_dMSTt-v0rEmKTl-YzoF5W-XA1okAP6-0XA,1015

+fastapi/__pycache__/__init__.cpython-39.pyc,,

+fastapi/__pycache__/applications.cpython-39.pyc,,

+fastapi/__pycache__/background.cpython-39.pyc,,

+fastapi/__pycache__/concurrency.cpython-39.pyc,,

+fastapi/__pycache__/datastructures.cpython-39.pyc,,

+fastapi/__pycache__/encoders.cpython-39.pyc,,

+fastapi/__pycache__/exception_handlers.cpython-39.pyc,,

+fastapi/__pycache__/exceptions.cpython-39.pyc,,

+fastapi/__pycache__/logger.cpython-39.pyc,,

+fastapi/__pycache__/param_functions.cpython-39.pyc,,

+fastapi/__pycache__/params.cpython-39.pyc,,

+fastapi/__pycache__/requests.cpython-39.pyc,,

+fastapi/__pycache__/responses.cpython-39.pyc,,

+fastapi/__pycache__/routing.cpython-39.pyc,,

+fastapi/__pycache__/staticfiles.cpython-39.pyc,,

+fastapi/__pycache__/templating.cpython-39.pyc,,

+fastapi/__pycache__/testclient.cpython-39.pyc,,

+fastapi/__pycache__/types.cpython-39.pyc,,

+fastapi/__pycache__/utils.cpython-39.pyc,,

+fastapi/__pycache__/websockets.cpython-39.pyc,,

+fastapi/applications.py,sha256=D24FMrLOxB7WeZxMaH14YWEg3FnB2Uw8HVcWst1b2CY,31603

+fastapi/background.py,sha256=HtN5_pJJrOdalSbuGSMKJAPNWUU5h7rY_BXXubu7-IQ,76

+fastapi/concurrency.py,sha256=2WhXMOKbv-BDmgorXCdwqmKfMGJekOMCb2x3WagOf6I,1720

+fastapi/datastructures.py,sha256=n_yD3ybdtdwB3cQTCie61RAjYRgxAsIZbwHRDy8Hkgk,1389

+fastapi/dependencies/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

+fastapi/dependencies/__pycache__/__init__.cpython-39.pyc,,

+fastapi/dependencies/__pycache__/models.cpython-39.pyc,,

+fastapi/dependencies/__pycache__/utils.cpython-39.pyc,,

+fastapi/dependencies/models.py,sha256=zNbioxICuOeb-9ADDVQ45hUHOC0PBtPVEfVU3f1l_nc,2494

+fastapi/dependencies/utils.py,sha256=6VkX0HiaG-j2zszpe-QFPY9ZH0zh54IxTArLxu3CJ6c,28831

+fastapi/encoders.py,sha256=o4o-qUlgCY1Tmm8QFeuNzvbBVpf0jzJanYMMUaVE8xA,5268

+fastapi/exception_handlers.py,sha256=UVYCCe4qt5-5_NuQ3SxTXjDvOdKMHiTfcLp3RUKXhg8,912

+fastapi/exceptions.py,sha256=KDnOOHp1EQ_Pz4XG9nHKYbf7AcagVwhsa6s72w6IRsQ,1080

+fastapi/logger.py,sha256=I9NNi3ov8AcqbsbC9wl1X-hdItKgYt2XTrx1f99Zpl4,54

+fastapi/middleware/__init__.py,sha256=oQDxiFVcc1fYJUOIFvphnK7pTT5kktmfL32QXpBFvvo,58

+fastapi/middleware/__pycache__/__init__.cpython-39.pyc,,

+fastapi/middleware/__pycache__/cors.cpython-39.pyc,,

+fastapi/middleware/__pycache__/gzip.cpython-39.pyc,,

+fastapi/middleware/__pycache__/httpsredirect.cpython-39.pyc,,

+fastapi/middleware/__pycache__/trustedhost.cpython-39.pyc,,

+fastapi/middleware/__pycache__/wsgi.cpython-39.pyc,,

+fastapi/middleware/cors.py,sha256=ynwjWQZoc_vbhzZ3_ZXceoaSrslHFHPdoM52rXr0WUU,79

+fastapi/middleware/gzip.py,sha256=xM5PcsH8QlAimZw4VDvcmTnqQamslThsfe3CVN2voa0,79

+fastapi/middleware/httpsredirect.py,sha256=rL8eXMnmLijwVkH7_400zHri1AekfeBd6D6qs8ix950,115

+fastapi/middleware/trustedhost.py,sha256=eE5XGRxGa7c5zPnMJDGp3BxaL25k5iVQlhnv-Pk0Pss,109

+fastapi/middleware/wsgi.py,sha256=Z3Ue-7wni4lUZMvH3G9ek__acgYdJstbnpZX_HQAboY,79

+fastapi/openapi/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

+fastapi/openapi/__pycache__/__init__.cpython-39.pyc,,

+fastapi/openapi/__pycache__/constants.cpython-39.pyc,,

+fastapi/openapi/__pycache__/docs.cpython-39.pyc,,

+fastapi/openapi/__pycache__/models.cpython-39.pyc,,

+fastapi/openapi/__pycache__/utils.cpython-39.pyc,,

+fastapi/openapi/constants.py,sha256=sJSpZzRp7Kky9R-jucU-K6_pJzLBRO75ddW7-MixZWc,166

+fastapi/openapi/docs.py,sha256=XyDQ4t2Ca95ZN_sSfwjCP3DcwM5Rv21FrwqTfk4x_H4,5538

+fastapi/openapi/models.py,sha256=xv8t-7w2cYFbXr9HtgX3NDxxMDyZ1Dkg5-1XZ3kDX8E,10439

+fastapi/openapi/utils.py,sha256=M51USLtZWu6sVQmLdkKsrodztBZkAxnqdilNMxcrqio,15448

+fastapi/param_functions.py,sha256=Kd3SoRYG0q7C52_Qm5HJ4xpE8nU3jt58b72naJjQTN4,6118

+fastapi/params.py,sha256=t5MXQR1GyH0F9ymjnMp6lr7dFIHnXRzau9dn7PiRVGc,8832

+fastapi/py.typed,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

+fastapi/requests.py,sha256=zayepKFcienBllv3snmWI20Gk0oHNVLU4DDhqXBb4LU,142

+fastapi/responses.py,sha256=I5-0Ao6AwBtIJo3BEJK4vBiYXGs73ZaD8IlolQAcJAI,936

+fastapi/routing.py,sha256=0zbGl-luaLIVeNrW7nZrYlAN7VAfYf1xCrt0dr9f8x0,46856

+fastapi/security/__init__.py,sha256=bO8pNmxqVRXUjfl2mOKiVZLn0FpBQ61VUYVjmppnbJw,881

+fastapi/security/__pycache__/__init__.cpython-39.pyc,,

+fastapi/security/__pycache__/api_key.cpython-39.pyc,,

+fastapi/security/__pycache__/base.cpython-39.pyc,,

+fastapi/security/__pycache__/http.cpython-39.pyc,,

+fastapi/security/__pycache__/oauth2.cpython-39.pyc,,

+fastapi/security/__pycache__/open_id_connect_url.cpython-39.pyc,,

+fastapi/security/__pycache__/utils.cpython-39.pyc,,

+fastapi/security/api_key.py,sha256=WdgOMNWoFbEuQeteeEbifDgjhDEdXj523FE5nEqAI8k,2427

+fastapi/security/base.py,sha256=dl4pvbC-RxjfbWgPtCWd8MVU-7CB2SZ22rJDXVCXO6c,141

+fastapi/security/http.py,sha256=jPDWs2V1pKeXTtCQjhXAG_ZnwTqaX_wq7Mun6uFDl30,5640

+fastapi/security/oauth2.py,sha256=w14ZLUfUWSmHTZnDnynQKMvfXvlYtoswnJ_90yVV6kM,7861

+fastapi/security/open_id_connect_url.py,sha256=vLlY8Ek6H3_QsA4mc_UhSJ8UTp9uMOYxYKn5DD7RmJc,1055

+fastapi/security/utils.py,sha256=izlh-HBaL1VnJeOeRTQnyNgI3hgTFs73eCyLy-snb4A,266

+fastapi/staticfiles.py,sha256=iirGIt3sdY2QZXd36ijs3Cj-T0FuGFda3cd90kM9Ikw,69

+fastapi/templating.py,sha256=4zsuTWgcjcEainMJFAlW6-gnslm6AgOS1SiiDWfmQxk,76

+fastapi/testclient.py,sha256=nBvaAmX66YldReJNZXPOk1sfuo2Q6hs8bOvIaCep6LQ,66

+fastapi/types.py,sha256=r6MngTHzkZOP9lzXgduje9yeZe5EInWAzCLuRJlhIuE,118

+fastapi/utils.py,sha256=g_H9Owy8vbUgY_L4tfYBJRdX9ofIqKPXkhh0LTRLRYE,5545

+fastapi/websockets.py,sha256=SroIkqE-lfChvtRP3mFaNKKtD6TmePDWBZtQfgM4noo,148

diff --git a/.venv/lib/python3.9/site-packages/fastapi-0.63.0.dist-info/REQUESTED b/.venv/lib/python3.9/site-packages/fastapi-0.63.0.dist-info/REQUESTED

new file mode 100644

index 0000000..e69de29

diff --git a/.venv/lib/python3.9/site-packages/fastapi-0.63.0.dist-info/WHEEL b/.venv/lib/python3.9/site-packages/fastapi-0.63.0.dist-info/WHEEL

new file mode 100644

index 0000000..b767d46

--- /dev/null

+++ b/.venv/lib/python3.9/site-packages/fastapi-0.63.0.dist-info/WHEEL

@@ -0,0 +1,4 @@

+Wheel-Version: 1.0

+Generator: flit 3.0.0

+Root-Is-Purelib: true

+Tag: py3-none-any

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__init__.py b/.venv/lib/python3.9/site-packages/fastapi/__init__.py

new file mode 100644

index 0000000..c0bb0e4

--- /dev/null

+++ b/.venv/lib/python3.9/site-packages/fastapi/__init__.py

@@ -0,0 +1,24 @@

+"""FastAPI framework, high performance, easy to learn, fast to code, ready for production"""

+

+__version__ = "0.63.0"

+

+from starlette import status as status

+

+from .applications import FastAPI as FastAPI

+from .background import BackgroundTasks as BackgroundTasks

+from .datastructures import UploadFile as UploadFile

+from .exceptions import HTTPException as HTTPException

+from .param_functions import Body as Body

+from .param_functions import Cookie as Cookie

+from .param_functions import Depends as Depends

+from .param_functions import File as File

+from .param_functions import Form as Form

+from .param_functions import Header as Header

+from .param_functions import Path as Path

+from .param_functions import Query as Query

+from .param_functions import Security as Security

+from .requests import Request as Request

+from .responses import Response as Response

+from .routing import APIRouter as APIRouter

+from .websockets import WebSocket as WebSocket

+from .websockets import WebSocketDisconnect as WebSocketDisconnect

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/__init__.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/__init__.cpython-39.pyc

new file mode 100644

index 0000000..5cbd4ab

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/__init__.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/applications.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/applications.cpython-39.pyc

new file mode 100644

index 0000000..1962550

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/applications.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/background.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/background.cpython-39.pyc

new file mode 100644

index 0000000..be06e5e

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/background.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/concurrency.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/concurrency.cpython-39.pyc

new file mode 100644

index 0000000..3052cd2

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/concurrency.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/datastructures.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/datastructures.cpython-39.pyc

new file mode 100644

index 0000000..63c54cc

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/datastructures.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/encoders.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/encoders.cpython-39.pyc

new file mode 100644

index 0000000..f70a635

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/encoders.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/exception_handlers.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/exception_handlers.cpython-39.pyc

new file mode 100644

index 0000000..df2e56b

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/exception_handlers.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/exceptions.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/exceptions.cpython-39.pyc

new file mode 100644

index 0000000..a9d70a5

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/exceptions.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/logger.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/logger.cpython-39.pyc

new file mode 100644

index 0000000..6984aaf

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/logger.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/param_functions.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/param_functions.cpython-39.pyc

new file mode 100644

index 0000000..23a3dc9

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/param_functions.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/params.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/params.cpython-39.pyc

new file mode 100644

index 0000000..8d73dc7

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/params.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/requests.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/requests.cpython-39.pyc

new file mode 100644

index 0000000..c9f16c9

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/requests.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/responses.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/responses.cpython-39.pyc

new file mode 100644

index 0000000..bbb60d2

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/responses.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/routing.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/routing.cpython-39.pyc

new file mode 100644

index 0000000..383b341

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/routing.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/staticfiles.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/staticfiles.cpython-39.pyc

new file mode 100644

index 0000000..5841a50

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/staticfiles.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/templating.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/templating.cpython-39.pyc

new file mode 100644

index 0000000..698c169

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/templating.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/testclient.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/testclient.cpython-39.pyc

new file mode 100644

index 0000000..4888739

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/testclient.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/types.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/types.cpython-39.pyc

new file mode 100644

index 0000000..19f71e8

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/types.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/utils.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/utils.cpython-39.pyc

new file mode 100644

index 0000000..ee54a25

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/utils.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/__pycache__/websockets.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/websockets.cpython-39.pyc

new file mode 100644

index 0000000..dce86bc

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/__pycache__/websockets.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/applications.py b/.venv/lib/python3.9/site-packages/fastapi/applications.py

new file mode 100644

index 0000000..92d041c

--- /dev/null

+++ b/.venv/lib/python3.9/site-packages/fastapi/applications.py

@@ -0,0 +1,739 @@

+from typing import Any, Callable, Coroutine, Dict, List, Optional, Sequence, Type, Union

+

+from fastapi import routing

+from fastapi.concurrency import AsyncExitStack

+from fastapi.datastructures import Default, DefaultPlaceholder

+from fastapi.encoders import DictIntStrAny, SetIntStr

+from fastapi.exception_handlers import (

+    http_exception_handler,

+    request_validation_exception_handler,

+)

+from fastapi.exceptions import RequestValidationError

+from fastapi.logger import logger

+from fastapi.openapi.docs import (

+    get_redoc_html,

+    get_swagger_ui_html,

+    get_swagger_ui_oauth2_redirect_html,

+)

+from fastapi.openapi.utils import get_openapi

+from fastapi.params import Depends

+from fastapi.types import DecoratedCallable

+from starlette.applications import Starlette

+from starlette.datastructures import State

+from starlette.exceptions import HTTPException

+from starlette.middleware import Middleware

+from starlette.requests import Request

+from starlette.responses import HTMLResponse, JSONResponse, Response

+from starlette.routing import BaseRoute

+from starlette.types import ASGIApp, Receive, Scope, Send

+

+

+class FastAPI(Starlette):

+    def __init__(

+        self,

+        *,

+        debug: bool = False,

+        routes: Optional[List[BaseRoute]] = None,

+        title: str = "FastAPI",

+        description: str = "",

+        version: str = "0.1.0",

+        openapi_url: Optional[str] = "/openapi.json",

+        openapi_tags: Optional[List[Dict[str, Any]]] = None,

+        servers: Optional[List[Dict[str, Union[str, Any]]]] = None,

+        dependencies: Optional[Sequence[Depends]] = None,

+        default_response_class: Type[Response] = Default(JSONResponse),

+        docs_url: Optional[str] = "/docs",

+        redoc_url: Optional[str] = "/redoc",

+        swagger_ui_oauth2_redirect_url: Optional[str] = "/docs/oauth2-redirect",

+        swagger_ui_init_oauth: Optional[Dict[str, Any]] = None,

+        middleware: Optional[Sequence[Middleware]] = None,

+        exception_handlers: Optional[

+            Dict[

+                Union[int, Type[Exception]],

+                Callable[[Request, Any], Coroutine[Any, Any, Response]],

+            ]

+        ] = None,

+        on_startup: Optional[Sequence[Callable[[], Any]]] = None,

+        on_shutdown: Optional[Sequence[Callable[[], Any]]] = None,

+        openapi_prefix: str = "",

+        root_path: str = "",

+        root_path_in_servers: bool = True,

+        responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+        callbacks: Optional[List[BaseRoute]] = None,

+        deprecated: Optional[bool] = None,

+        include_in_schema: bool = True,

+        **extra: Any,

+    ) -> None:

+        self._debug: bool = debug

+        self.state: State = State()

+        self.router: routing.APIRouter = routing.APIRouter(

+            routes=routes,

+            dependency_overrides_provider=self,

+            on_startup=on_startup,

+            on_shutdown=on_shutdown,

+            default_response_class=default_response_class,

+            dependencies=dependencies,

+            callbacks=callbacks,

+            deprecated=deprecated,

+            include_in_schema=include_in_schema,

+            responses=responses,

+        )

+        self.exception_handlers: Dict[

+            Union[int, Type[Exception]],

+            Callable[[Request, Any], Coroutine[Any, Any, Response]],

+        ] = (

+            {} if exception_handlers is None else dict(exception_handlers)

+        )

+        self.exception_handlers.setdefault(HTTPException, http_exception_handler)

+        self.exception_handlers.setdefault(

+            RequestValidationError, request_validation_exception_handler

+        )

+

+        self.user_middleware: List[Middleware] = (

+            [] if middleware is None else list(middleware)

+        )

+        self.middleware_stack: ASGIApp = self.build_middleware_stack()

+

+        self.title = title

+        self.description = description

+        self.version = version

+        self.servers = servers or []

+        self.openapi_url = openapi_url

+        self.openapi_tags = openapi_tags

+        # TODO: remove when discarding the openapi_prefix parameter

+        if openapi_prefix:

+            logger.warning(

+                '"openapi_prefix" has been deprecated in favor of "root_path", which '

+                "follows more closely the ASGI standard, is simpler, and more "

+                "automatic. Check the docs at "

+                "https://fastapi.tiangolo.com/advanced/sub-applications/"

+            )

+        self.root_path = root_path or openapi_prefix

+        self.root_path_in_servers = root_path_in_servers

+        self.docs_url = docs_url

+        self.redoc_url = redoc_url

+        self.swagger_ui_oauth2_redirect_url = swagger_ui_oauth2_redirect_url

+        self.swagger_ui_init_oauth = swagger_ui_init_oauth

+        self.extra = extra

+        self.dependency_overrides: Dict[Callable[..., Any], Callable[..., Any]] = {}

+

+        self.openapi_version = "3.0.2"

+

+        if self.openapi_url:

+            assert self.title, "A title must be provided for OpenAPI, e.g.: 'My API'"

+            assert self.version, "A version must be provided for OpenAPI, e.g.: '2.1.0'"

+        self.openapi_schema: Optional[Dict[str, Any]] = None

+        self.setup()

+

+ def openapi(self) -> Dict[str, Any]:

+ if not self.openapi_schema:

+ self.openapi_schema = get_openapi(

+ title=self.title,

+ version=self.version,

+ openapi_version=self.openapi_version,

+ description=self.description,

+ routes=self.routes,

+ tags=self.openapi_tags,

+ servers=self.servers,

+ )

+ return self.openapi_schema

+

+ def setup(self) -> None:

+ if self.openapi_url:

+ urls = (server_data.get("url") for server_data in self.servers)

+ server_urls = {url for url in urls if url}

+

+ async def openapi(req: Request) -> JSONResponse:

+ root_path = req.scope.get("root_path", "").rstrip("/")

+ if root_path not in server_urls:

+ if root_path and self.root_path_in_servers:

+ self.servers.insert(0, {"url": root_path})

+ server_urls.add(root_path)

+ return JSONResponse(self.openapi())

+

+ self.add_route(self.openapi_url, openapi, include_in_schema=False)

+ if self.openapi_url and self.docs_url:

+

+ async def swagger_ui_html(req: Request) -> HTMLResponse:

+ root_path = req.scope.get("root_path", "").rstrip("/")

+ openapi_url = root_path + self.openapi_url

+ oauth2_redirect_url = self.swagger_ui_oauth2_redirect_url

+ if oauth2_redirect_url:

+ oauth2_redirect_url = root_path + oauth2_redirect_url

+ return get_swagger_ui_html(

+ openapi_url=openapi_url,

+ title=self.title + " - Swagger UI",

+ oauth2_redirect_url=oauth2_redirect_url,

+ init_oauth=self.swagger_ui_init_oauth,

+ )

+

+ self.add_route(self.docs_url, swagger_ui_html, include_in_schema=False)

+

+ if self.swagger_ui_oauth2_redirect_url:

+

+ async def swagger_ui_redirect(req: Request) -> HTMLResponse:

+ return get_swagger_ui_oauth2_redirect_html()

+

+ self.add_route(

+ self.swagger_ui_oauth2_redirect_url,

+ swagger_ui_redirect,

+ include_in_schema=False,

+ )

+ if self.openapi_url and self.redoc_url:

+

+ async def redoc_html(req: Request) -> HTMLResponse:

+ root_path = req.scope.get("root_path", "").rstrip("/")

+ openapi_url = root_path + self.openapi_url

+ return get_redoc_html(

+ openapi_url=openapi_url, title=self.title + " - ReDoc"

+ )

+

+ self.add_route(self.redoc_url, redoc_html, include_in_schema=False)

+

+ async def __call__(self, scope: Scope, receive: Receive, send: Send) -> None:

+ if self.root_path:

+ scope["root_path"] = self.root_path

+ if AsyncExitStack:

+ async with AsyncExitStack() as stack:

+ scope["fastapi_astack"] = stack

+ await super().__call__(scope, receive, send)

+ else:

+ await super().__call__(scope, receive, send) # pragma: no cover

+

+ def add_api_route(

+ self,

+ path: str,

+ endpoint: Callable[..., Coroutine[Any, Any, Response]],

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ methods: Optional[List[str]] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Union[Type[Response], DefaultPlaceholder] = Default(

+ JSONResponse

+ ),

+ name: Optional[str] = None,

+ ) -> None:

+ self.router.add_api_route(

+ path,

+ endpoint=endpoint,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ methods=methods,

+ operation_id=operation_id,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ )

+

+ def api_route(

+ self,

+ path: str,

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ methods: Optional[List[str]] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Type[Response] = Default(JSONResponse),

+ name: Optional[str] = None,

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ def decorator(func: DecoratedCallable) -> DecoratedCallable:

+ self.router.add_api_route(

+ path,

+ func,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ methods=methods,

+ operation_id=operation_id,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ )

+ return func

+

+ return decorator

+

+ def add_api_websocket_route(

+ self, path: str, endpoint: Callable[..., Any], name: Optional[str] = None

+ ) -> None:

+ self.router.add_api_websocket_route(path, endpoint, name=name)

+

+ def websocket(

+ self, path: str, name: Optional[str] = None

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ def decorator(func: DecoratedCallable) -> DecoratedCallable:

+ self.add_api_websocket_route(path, func, name=name)

+ return func

+

+ return decorator

+

+ def include_router(

+ self,

+ router: routing.APIRouter,

+ *,

+ prefix: str = "",

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ include_in_schema: bool = True,

+ default_response_class: Type[Response] = Default(JSONResponse),

+ callbacks: Optional[List[BaseRoute]] = None,

+ ) -> None:

+ self.router.include_router(

+ router,

+ prefix=prefix,

+ tags=tags,

+ dependencies=dependencies,

+ responses=responses,

+ deprecated=deprecated,

+ include_in_schema=include_in_schema,

+ default_response_class=default_response_class,

+ callbacks=callbacks,

+ )

+

+ def get(

+ self,

+ path: str,

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Type[Response] = Default(JSONResponse),

+ name: Optional[str] = None,

+ callbacks: Optional[List[BaseRoute]] = None,

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ return self.router.get(

+ path,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ operation_id=operation_id,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ callbacks=callbacks,

+ )

+

+ def put(

+ self,

+ path: str,

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Type[Response] = Default(JSONResponse),

+ name: Optional[str] = None,

+ callbacks: Optional[List[BaseRoute]] = None,

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ return self.router.put(

+ path,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ operation_id=operation_id,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ callbacks=callbacks,

+ )

+

+ def post(

+ self,

+ path: str,

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Type[Response] = Default(JSONResponse),

+ name: Optional[str] = None,

+ callbacks: Optional[List[BaseRoute]] = None,

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ return self.router.post(

+ path,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ operation_id=operation_id,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ callbacks=callbacks,

+ )

+

+ def delete(

+ self,

+ path: str,

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Type[Response] = Default(JSONResponse),

+ name: Optional[str] = None,

+ callbacks: Optional[List[BaseRoute]] = None,

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ return self.router.delete(

+ path,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ operation_id=operation_id,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ callbacks=callbacks,

+ )

+

+ def options(

+ self,

+ path: str,

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Type[Response] = Default(JSONResponse),

+ name: Optional[str] = None,

+ callbacks: Optional[List[BaseRoute]] = None,

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ return self.router.options(

+ path,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ operation_id=operation_id,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ callbacks=callbacks,

+ )

+

+ def head(

+ self,

+ path: str,

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Type[Response] = Default(JSONResponse),

+ name: Optional[str] = None,

+ callbacks: Optional[List[BaseRoute]] = None,

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ return self.router.head(

+ path,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ operation_id=operation_id,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ callbacks=callbacks,

+ )

+

+ def patch(

+ self,

+ path: str,

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Type[Response] = Default(JSONResponse),

+ name: Optional[str] = None,

+ callbacks: Optional[List[BaseRoute]] = None,

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ return self.router.patch(

+ path,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ operation_id=operation_id,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ callbacks=callbacks,

+ )

+

+ def trace(

+ self,

+ path: str,

+ *,

+ response_model: Optional[Type[Any]] = None,

+ status_code: int = 200,

+ tags: Optional[List[str]] = None,

+ dependencies: Optional[Sequence[Depends]] = None,

+ summary: Optional[str] = None,

+ description: Optional[str] = None,

+ response_description: str = "Successful Response",

+ responses: Optional[Dict[Union[int, str], Dict[str, Any]]] = None,

+ deprecated: Optional[bool] = None,

+ operation_id: Optional[str] = None,

+ response_model_include: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_exclude: Optional[Union[SetIntStr, DictIntStrAny]] = None,

+ response_model_by_alias: bool = True,

+ response_model_exclude_unset: bool = False,

+ response_model_exclude_defaults: bool = False,

+ response_model_exclude_none: bool = False,

+ include_in_schema: bool = True,

+ response_class: Type[Response] = Default(JSONResponse),

+ name: Optional[str] = None,

+ callbacks: Optional[List[BaseRoute]] = None,

+ ) -> Callable[[DecoratedCallable], DecoratedCallable]:

+ return self.router.trace(

+ path,

+ response_model=response_model,

+ status_code=status_code,

+ tags=tags,

+ dependencies=dependencies,

+ summary=summary,

+ description=description,

+ response_description=response_description,

+ responses=responses,

+ deprecated=deprecated,

+ operation_id=operation_id,

+ response_model_include=response_model_include,

+ response_model_exclude=response_model_exclude,

+ response_model_by_alias=response_model_by_alias,

+ response_model_exclude_unset=response_model_exclude_unset,

+ response_model_exclude_defaults=response_model_exclude_defaults,

+ response_model_exclude_none=response_model_exclude_none,

+ include_in_schema=include_in_schema,

+ response_class=response_class,

+ name=name,

+ callbacks=callbacks,

+ )
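
Review note on the hunk above: `api_route` and `websocket` both follow the same registering-decorator pattern, where the inner `decorator` records the function on the router and then returns it unchanged. The sketch below reproduces only that pattern with the standard library. `MiniRouter` and `MiniApp` are hypothetical stand-ins invented for illustration, not FastAPI classes, and they take far fewer options than the real methods.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple, TypeVar

DecoratedCallable = TypeVar("DecoratedCallable", bound=Callable[..., Any])


class MiniRouter:
    """Hypothetical stand-in for a router: it only records registrations."""

    def __init__(self) -> None:
        self.routes: List[Tuple[str, Callable[..., Any], Dict[str, Any]]] = []

    def add_api_route(
        self, path: str, endpoint: Callable[..., Any], **options: Any
    ) -> None:
        self.routes.append((path, endpoint, options))


class MiniApp:
    """Hypothetical stand-in for the application object."""

    def __init__(self) -> None:
        self.router = MiniRouter()

    def api_route(
        self,
        path: str,
        *,
        status_code: int = 200,
        methods: Optional[List[str]] = None,
    ) -> Callable[[DecoratedCallable], DecoratedCallable]:
        def decorator(func: DecoratedCallable) -> DecoratedCallable:
            # Register the function, then hand it back untouched so the
            # module-level name still refers to the original callable.
            self.router.add_api_route(
                path, func, status_code=status_code, methods=methods
            )
            return func

        return decorator


app = MiniApp()


@app.api_route("/items", methods=["GET"])
def list_items() -> List[str]:
    return ["a", "b"]
```

Because the decorator returns `func` itself rather than a wrapper, the decorated function can still be called directly, for example from unit tests.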

diff --git a/.venv/lib/python3.9/site-packages/fastapi/background.py b/.venv/lib/python3.9/site-packages/fastapi/background.py

new file mode 100644

index 0000000..dd3bbe2

--- /dev/null

+++ b/.venv/lib/python3.9/site-packages/fastapi/background.py

@@ -0,0 +1 @@

+from starlette.background import BackgroundTasks as BackgroundTasks # noqa

diff --git a/.venv/lib/python3.9/site-packages/fastapi/concurrency.py b/.venv/lib/python3.9/site-packages/fastapi/concurrency.py

new file mode 100644

index 0000000..d1fdfe5

--- /dev/null

+++ b/.venv/lib/python3.9/site-packages/fastapi/concurrency.py

@@ -0,0 +1,51 @@

+from typing import Any, Callable

+

+from starlette.concurrency import iterate_in_threadpool as iterate_in_threadpool # noqa

+from starlette.concurrency import run_in_threadpool as run_in_threadpool # noqa

+from starlette.concurrency import ( # noqa

+ run_until_first_complete as run_until_first_complete,

+)

+

+asynccontextmanager_error_message = """

+FastAPI's contextmanager_in_threadpool requires Python 3.7 or above,

+or the backport for Python 3.6, installed with:

+ pip install async-generator

+"""

+

+

+def _fake_asynccontextmanager(func: Callable[..., Any]) -> Callable[..., Any]:

+ def raiser(*args: Any, **kwargs: Any) -> Any:

+ raise RuntimeError(asynccontextmanager_error_message)

+

+ return raiser

+

+

+try:

+ from contextlib import asynccontextmanager as asynccontextmanager # type: ignore

+except ImportError:

+ try:

+ from async_generator import ( # type: ignore # isort: skip

+ asynccontextmanager as asynccontextmanager,

+ )

+ except ImportError: # pragma: no cover

+ asynccontextmanager = _fake_asynccontextmanager

+

+try:

+ from contextlib import AsyncExitStack as AsyncExitStack # type: ignore

+except ImportError:

+ try:

+ from async_exit_stack import AsyncExitStack as AsyncExitStack # type: ignore

+ except ImportError: # pragma: no cover

+ AsyncExitStack = None # type: ignore

+

+

+@asynccontextmanager # type: ignore

+async def contextmanager_in_threadpool(cm: Any) -> Any:

+ try:

+ yield await run_in_threadpool(cm.__enter__)

+ except Exception as e:

+ ok = await run_in_threadpool(cm.__exit__, type(e), e, None)

+ if not ok:

+ raise e

+ else:

+ await run_in_threadpool(cm.__exit__, None, None, None)
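
Review note on `contextmanager_in_threadpool` above: it adapts a synchronous context manager into an async one by running `__enter__` and `__exit__` off the event loop. The following is a minimal sketch of the same control flow using only the standard library. `run_in_thread`, `in_threadpool` and `blocking_resource` are hypothetical names for this example, and `loop.run_in_executor` is swapped in for Starlette's `run_in_threadpool`, so thread-pool details may differ from the real helper.

```python
import asyncio
from contextlib import asynccontextmanager, contextmanager
from typing import Any, Callable, List

events: List[str] = []


@contextmanager
def blocking_resource():
    # Stands in for a synchronous resource such as a blocking DB session.
    events.append("enter")
    try:
        yield "resource"
    finally:
        events.append("exit")


async def run_in_thread(func: Callable[..., Any], *args: Any) -> Any:
    # Assumption: the default executor replaces Starlette's thread pool here.
    loop = asyncio.get_running_loop()
    return await loop.run_in_executor(None, func, *args)


@asynccontextmanager
async def in_threadpool(cm: Any) -> Any:
    try:
        yield await run_in_thread(cm.__enter__)
    except Exception as e:
        # Give the wrapped manager a chance to suppress the exception.
        ok = await run_in_thread(cm.__exit__, type(e), e, None)
        if not ok:
            raise e
    else:
        await run_in_thread(cm.__exit__, None, None, None)


async def main() -> List[str]:
    async with in_threadpool(blocking_resource()) as value:
        events.append(f"using {value}")
    return events


result = asyncio.run(main())
```

The `except`/`else` split mirrors the hunk: `__exit__` is called exactly once, with exception details on failure and with three `None` values on success.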

diff --git a/.venv/lib/python3.9/site-packages/fastapi/datastructures.py b/.venv/lib/python3.9/site-packages/fastapi/datastructures.py

new file mode 100644

index 0000000..f22409c

--- /dev/null

+++ b/.venv/lib/python3.9/site-packages/fastapi/datastructures.py

@@ -0,0 +1,47 @@

+from typing import Any, Callable, Iterable, Type, TypeVar

+

+from starlette.datastructures import State as State # noqa: F401

+from starlette.datastructures import UploadFile as StarletteUploadFile

+

+

+class UploadFile(StarletteUploadFile):

+ @classmethod

+ def __get_validators__(cls: Type["UploadFile"]) -> Iterable[Callable[..., Any]]:

+ yield cls.validate

+

+ @classmethod

+ def validate(cls: Type["UploadFile"], v: Any) -> Any:

+ if not isinstance(v, StarletteUploadFile):

+ raise ValueError(f"Expected UploadFile, received: {type(v)}")

+ return v

+

+

+class DefaultPlaceholder:

+ """

+ You shouldn't use this class directly.

+

+ It's used internally to recognize when a default value has been overwritten, even

+ if the overridden default value was truthy.

+ """

+

+ def __init__(self, value: Any):

+ self.value = value

+

+ def __bool__(self) -> bool:

+ return bool(self.value)

+

+ def __eq__(self, o: object) -> bool:

+ return isinstance(o, DefaultPlaceholder) and o.value == self.value

+

+

+DefaultType = TypeVar("DefaultType")

+

+

+def Default(value: DefaultType) -> DefaultType:

+ """

+ You shouldn't use this function directly.

+

+ It's used internally to recognize when a default value has been overwritten, even

+ if the overridden default value was truthy.

+ """

+ return DefaultPlaceholder(value) # type: ignore
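
Review note on `Default` / `DefaultPlaceholder` above: wrapping a default in a placeholder lets calling code tell "the caller passed nothing" apart from "the caller explicitly passed a value", even when that value equals the default. The sketch below re-declares the two definitions from the hunk so it runs on its own, and adds `first_explicit`, a hypothetical helper written for this example. The string values stand in for response classes.

```python
from typing import Any


class DefaultPlaceholder:
    def __init__(self, value: Any) -> None:
        self.value = value

    def __bool__(self) -> bool:
        return bool(self.value)

    def __eq__(self, o: object) -> bool:
        return isinstance(o, DefaultPlaceholder) and o.value == self.value


def Default(value: Any) -> Any:
    return DefaultPlaceholder(value)


def first_explicit(*candidates: Any) -> Any:
    """Hypothetical helper: prefer the first explicitly supplied value."""
    for candidate in candidates:
        if not isinstance(candidate, DefaultPlaceholder):
            return candidate
    # Every candidate was a placeholder: unwrap the first default.
    return candidates[0].value


route_level = Default("JSONResponse")   # nothing set on the route
router_level = "HTMLResponse"           # set explicitly on the router
app_level = Default("JSONResponse")     # nothing set on the app

chosen = first_explicit(route_level, router_level, app_level)
fallback = first_explicit(route_level, app_level)
```

With a plain `JSONResponse` default, a route that left `response_class` unset would be indistinguishable from one that requested `JSONResponse` on purpose, so an outer-level override could not be applied safely.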

diff --git a/.venv/lib/python3.9/site-packages/fastapi/dependencies/__init__.py b/.venv/lib/python3.9/site-packages/fastapi/dependencies/__init__.py

new file mode 100644

index 0000000..e69de29

diff --git a/.venv/lib/python3.9/site-packages/fastapi/dependencies/__pycache__/__init__.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/dependencies/__pycache__/__init__.cpython-39.pyc

new file mode 100644

index 0000000..daf783a

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/dependencies/__pycache__/__init__.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/dependencies/__pycache__/models.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/dependencies/__pycache__/models.cpython-39.pyc

new file mode 100644

index 0000000..dfae577

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/dependencies/__pycache__/models.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/dependencies/__pycache__/utils.cpython-39.pyc b/.venv/lib/python3.9/site-packages/fastapi/dependencies/__pycache__/utils.cpython-39.pyc

new file mode 100644

index 0000000..db4e984

Binary files /dev/null and b/.venv/lib/python3.9/site-packages/fastapi/dependencies/__pycache__/utils.cpython-39.pyc differ

diff --git a/.venv/lib/python3.9/site-packages/fastapi/dependencies/models.py b/.venv/lib/python3.9/site-packages/fastapi/dependencies/models.py

new file mode 100644

index 0000000..443590b

--- /dev/null

+++ b/.venv/lib/python3.9/site-packages/fastapi/dependencies/models.py

@@ -0,0 +1,58 @@

+from typing import Any, Callable, List, Optional, Sequence

+

+from fastapi.security.base import SecurityBase

+from pydantic.fields import ModelField

+

+

+class SecurityRequirement:

+ def __init__(

+ self, security_scheme: SecurityBase, scopes: Optional[Sequence[str]] = None

+ ):

+ self.security_scheme = security_scheme

+ self.scopes = scopes

+

+

+class Dependant:

+ def __init__(

+ self,

+ *,

+ path_params: Optional[List[ModelField]] = None,

+ query_params: Optional[List[ModelField]] = None,

+ header_params: Optional[List[ModelField]] = None,

+ cookie_params: Optional[List[ModelField]] = None,

+ body_params: Optional[List[ModelField]] = None,

+ dependencies: Optional[List["Dependant"]] = None,

+ security_schemes: Optional[List[SecurityRequirement]] = None,

+ name: Optional[str] = None,

+ call: Optional[Callable[..., Any]] = None,

+ request_param_name: Optional[str] = None,

+ websocket_param_name: Optional[str] = None,

+ http_connection_param_name: Optional[str] = None,

+ response_param_name: Optional[str] = None,

+ background_tasks_param_name: Optional[str] = None,

+ security_scopes_param_name: Optional[str] = None,

+ security_scopes: Optional[List[str]] = None,

+ use_cache: bool = True,

+ path: Optional[str] = None,

+ ) -> None:

+ self.path_params = path_params or []

+ self.query_params = query_params or []

+ self.header_params = header_params or []

+ self.cookie_params = cookie_params or []

+ self.body_params = body_params or []

+ self.dependencies = dependencies or []

+ self.security_requirements = security_schemes or []

+ self.request_param_name = request_param_name

+ self.websocket_param_name = websocket_param_name

+ self.http_connection_param_name = http_connection_param_name

+ self.response_param_name = response_param_name

+ self.background_tasks_param_name = background_tasks_param_name

+ self.security_scopes = security_scopes

+ self.security_scopes_param_name = security_scopes_param_name

+ self.name = name

+ self.call = call

+ self.use_cache = use_cache

+ # Store the path to be able to re-generate a dependable from it in overrides

+ self.path = path

+ # Save the cache key at creation to optimize performance

+ self.cache_key = (self.call, tuple(sorted(set(self.security_scopes or []))))
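
Review note on `cache_key` above: the key pairs the dependency callable with a sorted, de-duplicated tuple of scopes, so two dependants that differ only in scope order or repetition share one key. The sketch below isolates that expression. `make_cache_key` and `get_db` are hypothetical names, and the dictionary lookup is an assumption about how such a key might be consumed, since that code is not part of this hunk.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

CacheKey = Tuple[Optional[Callable[..., Any]], Tuple[str, ...]]


def make_cache_key(
    call: Optional[Callable[..., Any]],
    security_scopes: Optional[List[str]] = None,
) -> CacheKey:
    # Same expression as in Dependant.__init__: set() drops duplicates,
    # sorted() fixes the order, tuple() makes the result hashable.
    return (call, tuple(sorted(set(security_scopes or []))))


def get_db() -> object:
    return object()


key_a = make_cache_key(get_db, ["items:write", "items:read", "items:read"])
key_b = make_cache_key(get_db, ["items:read", "items:write"])
key_c = make_cache_key(get_db)

# Assumed usage: a per-request cache keyed by the normalized tuple.
cache: Dict[CacheKey, Any] = {key_a: "resolved once"}
```

Here `key_a` and `key_b` compare equal, while `key_c` (no scopes) is a distinct key for the same callable.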

diff --git a/.venv/lib/python3.9/site-packages/fastapi/dependencies/utils.py b/.venv/lib/python3.9/site-packages/fastapi/dependencies/utils.py

new file mode 100644

index 0000000..fcfaa2c

--- /dev/null

+++ b/.venv/lib/python3.9/site-packages/fastapi/dependencies/utils.py

@@ -0,0 +1,783 @@

+import asyncio

+import inspect

+from contextlib import contextmanager

+from copy import deepcopy

+from typing import (

+ Any,

+ Callable,

+ Dict,

+ List,

+ Mapping,

+ Optional,

+ Sequence,

+ Tuple,

+ Type,

+ Union,

+ cast,

+)

+

+from fastapi import params

+from fastapi.concurrency import (

+ AsyncExitStack,

+ _fake_asynccontextmanager,

+ asynccontextmanager,

+ contextmanager_in_threadpool,

+)

+from fastapi.dependencies.models import Dependant, SecurityRequirement

+from fastapi.logger import logger

+from fastapi.security.base import SecurityBase

+from fastapi.security.oauth2 import OAuth2, SecurityScopes

+from fastapi.security.open_id_connect_url import OpenIdConnect

+from fastapi.utils import create_response_field, get_path_param_names

+from pydantic import BaseModel, create_model

+from pydantic.error_wrappers import ErrorWrapper

+from pydantic.errors import MissingError

+from pydantic.fields import (

+ SHAPE_LIST,

+ SHAPE_SEQUENCE,

+ SHAPE_SET,

+ SHAPE_SINGLETON,

+ SHAPE_TUPLE,

+ SHAPE_TUPLE_ELLIPSIS,

+ FieldInfo,

+ ModelField,

+ Required,

+)

+from pydantic.schema import get_annotation_from_field_info

+from pydantic.typing import ForwardRef, evaluate_forwardref

+from pydantic.utils import lenient_issubclass

+from starlette.background import BackgroundTasks

+from starlette.concurrency import run_in_threadpool

+from starlette.datastructures import FormData, Headers, QueryParams, UploadFile

+from starlette.requests import HTTPConnection, Request

+from starlette.responses import Response

+from starlette.websockets import WebSocket

+

+sequence_shapes = {

+    SHAPE_LIST,

+    SHAPE_SET,

+    SHAPE_TUPLE,

+    SHAPE_SEQUENCE,

+    SHAPE_TUPLE_ELLIPSIS,

+}

+sequence_types = (list, set, tuple)

+sequence_shape_to_type = {

+    SHAPE_LIST: list,

+    SHAPE_SET: set,

+    SHAPE_TUPLE: tuple,

+    SHAPE_SEQUENCE: list,

+    SHAPE_TUPLE_ELLIPSIS: list,

+}

+

+

+multipart_not_installed_error = (

+    'Form data requires "python-multipart" to be installed. \n'

+    'You can install "python-multipart" with: \n\n'

+    "pip install python-multipart\n"

+)

+multipart_incorrect_install_error = (

+    'Form data requires "python-multipart" to be installed. '

+    'It seems you installed "multipart" instead. \n'

+    'You can remove "multipart" with: \n\n'

+    "pip uninstall multipart\n\n"

+    'And then install "python-multipart" with: \n\n'

+    "pip install python-multipart\n"

+)

+

+

+def check_file_field(field: ModelField) -> None:

+    field_info = field.field_info

+    if isinstance(field_info, params.Form):

+        try:

+            # __version__ is available in both multiparts, and can be mocked

+            from multipart import __version__  # type: ignore

+

+            assert __version__

+            try:

+                # parse_options_header is only available in the right multipart

+                from multipart.multipart import parse_options_header  # type: ignore

+

+                assert parse_options_header

+            except ImportError:

+                logger.error(multipart_incorrect_install_error)

+                raise RuntimeError(multipart_incorrect_install_error)

+        except ImportError:

+            logger.error(multipart_not_installed_error)

+            raise RuntimeError(multipart_not_installed_error)

+

+

+def get_param_sub_dependant(

+    *, param: inspect.Parameter, path: str, security_scopes: Optional[List[str]] = None

+) -> Dependant:

+    depends: params.Depends = param.default

+    if depends.dependency:

+        dependency = depends.dependency

+    else:

+        dependency = param.annotation

+    return get_sub_dependant(

+        depends=depends,

+        dependency=dependency,

+        path=path,

+        name=param.name,

+        security_scopes=security_scopes,

+    )

+

+

+def get_parameterless_sub_dependant(*, depends: params.Depends, path: str) -> Dependant:

+    assert callable(

+        depends.dependency

+    ), "A parameter-less dependency must have a callable dependency"

+    return get_sub_dependant(depends=depends, dependency=depends.dependency, path=path)

+

+

+def get_sub_dependant(

+    *,

+    depends: params.Depends,

+    dependency: Callable[..., Any],

+    path: str,

+    name: Optional[str] = None,

+    security_scopes: Optional[List[str]] = None,

+) -> Dependant:

+    security_requirement = None

+    security_scopes = security_scopes or []

+    if isinstance(depends, params.Security):

+        dependency_scopes = depends.scopes

+        security_scopes.extend(dependency_scopes)

+    if isinstance(dependency, SecurityBase):

+        use_scopes: List[str] = []

+        if isinstance(dependency, (OAuth2, OpenIdConnect)):

+            use_scopes = security_scopes

+        security_requirement = SecurityRequirement(

+            security_scheme=dependency, scopes=use_scopes

+        )

+    sub_dependant = get_dependant(

+        path=path,

+        call=dependency,

+        name=name,

+        security_scopes=security_scopes,

+        use_cache=depends.use_cache,

+    )

+    if security_requirement:

+        sub_dependant.security_requirements.append(security_requirement)

+    sub_dependant.security_scopes = security_scopes

+    return sub_dependant

+

+

+CacheKey = Tuple[Optional[Callable[..., Any]], Tuple[str, ...]]

+

+

+def get_flat_dependant(

+    dependant: Dependant,

+    *,

+    skip_repeats: bool = False,

+    visited: Optional[List[CacheKey]] = None,

+) -> Dependant:

+    if visited is None:

+        visited = []

+    visited.append(dependant.cache_key)

+

+    flat_dependant = Dependant(

+        path_params=dependant.path_params.copy(),

+        query_params=dependant.query_params.copy(),

+        header_params=dependant.header_params.copy(),

+        cookie_params=dependant.cookie_params.copy(),

+        body_params=dependant.body_params.copy(),

+        security_schemes=dependant.security_requirements.copy(),

+        use_cache=dependant.use_cache,

+        path=dependant.path,

+    )

+    for sub_dependant in dependant.dependencies:

+        if skip_repeats and sub_dependant.cache_key in visited:

+            continue

+        flat_sub = get_flat_dependant(

+            sub_dependant, skip_repeats=skip_repeats, visited=visited

+        )

+        flat_dependant.path_params.extend(flat_sub.path_params)

+        flat_dependant.query_params.extend(flat_sub.query_params)

+        flat_dependant.header_params.extend(flat_sub.header_params)

+        flat_dependant.cookie_params.extend(flat_sub.cookie_params)

+        flat_dependant.body_params.extend(flat_sub.body_params)

+        flat_dependant.security_requirements.extend(flat_sub.security_requirements)

+    return flat_dependant

+

+

+def get_flat_params(dependant: Dependant) -> List[ModelField]:

+    flat_dependant = get_flat_dependant(dependant, skip_repeats=True)

+    return (

+        flat_dependant.path_params

+        + flat_dependant.query_params

+        + flat_dependant.header_params

+        + flat_dependant.cookie_params

+    )

+

+

+def is_scalar_field(field: ModelField) -> bool:

+    field_info = field.field_info

+    if not (

+        field.shape == SHAPE_SINGLETON

+        and not lenient_issubclass(field.type_, BaseModel)

+        and not lenient_issubclass(field.type_, sequence_types + (dict,))

+        and not isinstance(field_info, params.Body)

+    ):

+        return False

+    if field.sub_fields:

+        if not all(is_scalar_field(f) for f in field.sub_fields):

+            return False

+    return True

+

+

+def is_scalar_sequence_field(field: ModelField) -> bool:

+    if (field.shape in sequence_shapes) and not lenient_issubclass(

+        field.type_, BaseModel

+    ):

+        if field.sub_fields is not None:

+            for sub_field in field.sub_fields:

+                if not is_scalar_field(sub_field):

+                    return False

+        return True

+    if lenient_issubclass(field.type_, sequence_types):

+        return True

+    return False

+

+

+def get_typed_signature(call: Callable[..., Any]) -> inspect.Signature:

+    signature = inspect.signature(call)

+    globalns = getattr(call, "__globals__", {})

+    typed_params = [

+        inspect.Parameter(